Shack–Hartmann Wavefront Sensors

Acronym: SHWFS

Definition: wavefront sensors which are based on a microlens array

Alternative term: Hartmann–Shack wavefront sensor

More general term: wavefront sensors

German: Shack–Hartmann-Wellenfrontsensor

Categories: photonic devices, light detection and characterization

Author: Dr. Rüdiger Paschotta

The Shack–Hartmann (or sometimes Hartmann–Shack) wavefront sensor is the most common type of wavefront sensor, named after Johannes Franz Hartmann and Roland Shack. It can be used for measuring the wavefront shape of incident light, e.g. from an attenuated laser beam or from star light in an optical telescope.

The article also explains the original Hartmann wavefront sensor, an earlier form without the further development of the technology by Shack and Platt.

Operation Principle

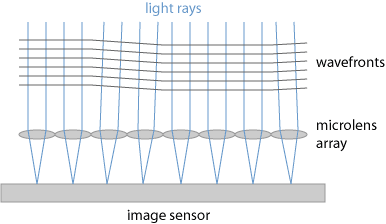

Essentially, the optical setup of the sensor consists of an array of microlenses and an image sensor, which is mounted in the focal plane of the microlens array (and can be called a focal plane array). The original invention by Johannes Franz Hartmann employed a Hartmann mask, which is an array of pinholes instead of lenses. Roland Shack and Ben Platt introduced the use of lens arrays in the late 1960s, which substantially increased the sensitivity of those devices and thus allowed wavefront sensing at much lower intensity levels; that is important e.g. for applications in astronomy. Wavefront sensors with a Hartmann mask (with holes) are still used in some cases; these are called Hartmann wavefront sensors.

The operation principle of such a wavefront sensor is fairly simple. Each lenslet of the device focuses incoming radiation to a spot on the sensor (see Figure 1), and the position of that spot indicates the orientation of the wavefronts, averaged over the entrance area of the lenslet.

A computer calculates the positions of the spots behind all lenslets (lens segments) from the acquired image and, based on those data, estimates the wavefront distortions over the full entrance area of the sensor.

Further Details

Calculation of Spot Positions

Due to the finite spatial resolution of the image sensor, one should not simply take the spot position to be that of the pixel receiving the highest optical intensity. A better method is to take the “center of gravity”, where the coordinates of that center are calculated as first moments of the intensity distribution. That way, the position resolution can be far better than the pixel spacing. More refined computational algorithms, which better suppress noise influences, can provide even more accurate data. Particularly in difficult measurement situations, for example with very large phase excursions or significant noise influences, the quality of the numerical algorithm used can be crucial. One may also try to eliminate or at least reduce any cross-talk, i.e., the influence of light from neighboring lenses.
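The center-of-gravity calculation can be sketched as follows. This is only a minimal illustration in plain Python; the function name `spot_centroid`, the constant `background` parameter and the 5 × 5 sub-image are assumptions made for the example and do not come from any particular sensor software.

```python
def spot_centroid(image, background=0.0):
    """Return the (x, y) center of gravity of a 2D intensity image.

    `image` is a list of rows of pixel values. `background` is an assumed
    constant offset which is subtracted (clipping at zero) before the
    first moments are calculated.
    """
    total = sum_x = sum_y = 0.0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            weight = max(value - background, 0.0)
            total += weight
            sum_x += weight * x
            sum_y += weight * y
    if total == 0.0:
        raise ValueError("no signal above background")
    return sum_x / total, sum_y / total


# Hypothetical 5 x 5 sub-image behind one lenslet (arbitrary units).
sub_image = [
    [0, 0, 0, 0, 0],
    [0, 1, 2, 1, 0],
    [0, 2, 8, 6, 0],
    [0, 1, 2, 1, 0],
    [0, 0, 0, 0, 0],
]
cx, cy = spot_centroid(sub_image)
```

In this example, the brightest pixel is at x = 2, while the centroid lies at x = 52/24 ≈ 2.17, i.e., the position is resolved to a fraction of the pixel spacing.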

The operation principle can also be explained with Fourier optics: each lens produces (in a somewhat simplified picture) an intensity profile in the focal plane which is related to the spatial Fourier transform of the complex amplitude distribution of the incident radiation.
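The Fourier picture can be illustrated with a simplified one-dimensional model: a linear phase ramp across the lenslet aperture (a tilted wavefront) shifts the peak of the Fourier-transformed field. The sketch below assumes a uniformly illuminated aperture sampled at `N` points and a tilt of an integer number of waves across the aperture; these values and the function name are chosen only for illustration.

```python
import cmath
import math


def focal_plane_intensity(field):
    """Intensity of the discrete Fourier transform of a sampled 1D field."""
    n = len(field)
    intensity = []
    for k in range(n):
        amplitude = sum(
            field[m] * cmath.exp(-2j * math.pi * k * m / n) for m in range(n)
        )
        intensity.append(abs(amplitude) ** 2)
    return intensity


N = 32           # samples across one lenslet aperture (assumed)
tilt_cycles = 3  # phase ramp of 3 x 2*pi across the aperture (assumed)

# Plane wave at normal incidence and a tilted plane wave.
flat = [1.0 + 0.0j] * N
tilted = [cmath.exp(2j * math.pi * tilt_cycles * m / N) for m in range(N)]

intensity_flat = focal_plane_intensity(flat)
intensity_tilted = focal_plane_intensity(tilted)

# Index of the brightest sample in the (discrete) focal plane.
peak_flat = intensity_flat.index(max(intensity_flat))
peak_tilted = intensity_tilted.index(max(intensity_tilted))
```

The peak moves from sample 0 to sample 3, i.e., in proportion to the applied tilt. Zero padding, which would resolve the shape of the spot, is omitted for brevity.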

The incident radiation does not necessarily have to be monochromatic, even though wavefronts are strictly speaking well defined only then. For polychromatic light, one simply obtains a kind of spectrally averaged wavefront orientation. In many practical cases, different spectral components will actually exhibit very similar wavefront orientations.

The achieved spatial resolution is of course limited by the spacing of the lenslets, because each one can provide only a single wavefront orientation in two dimensions.

Calculation of Wavefront Distortions

The local wavefront orientation is simple to calculate: when the wavefronts are tilted with an angle θ against the horizontal direction, this causes a shift of the spot position by d tan θ, where d is the distance between the lenses and the sensor surface. So one simply has to divide the calculated spot offset by d to obtain the tangent of the wavefront orientation in the corresponding direction. The distance d will usually be approximately the focal length of the lenses, but it does not strictly have to coincide with that. It may even be advantageous to make the spacing slightly larger or smaller, e.g. if otherwise the spot size on the sensor would be below the pixel spacing, which would make it more difficult to accurately determine the positions.
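As a small numerical illustration of this relation, the following sketch converts spot offsets into local wavefront slopes. The function name and the example values (offsets of 10 μm and −4 μm at d = 5 mm) are assumptions for the example only.

```python
import math


def local_slopes(offset_x, offset_y, distance):
    """Return (tan(theta_x), tan(theta_y)) for one lenslet.

    The spot offsets and the lens-to-sensor distance d must be given
    in the same length unit.
    """
    return offset_x / distance, offset_y / distance


# Assumed example: offsets of +10 um and -4 um at d = 5 mm (all in mm).
slope_x, slope_y = local_slopes(0.010, -0.004, 5.0)

# For such small angles, the angle in radians nearly equals its tangent.
theta_x = math.atan(slope_x)
```

Here, the slope in the x direction is 0.002, corresponding to a tilt angle of about 2 mrad.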

From the obtained orientations (spatial derivatives of the optical phase), which are defined on a rectangular grid, one may reconstruct the corresponding wavefront field by a kind of integration. Optimized algorithms can be used for minimum sensitivity to measurement noise.
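The simplest form of such an integration is a trapezoidal path integration over the lenslet grid, sketched below. This is deliberately a naive scheme and not one of the noise-optimized algorithms mentioned above; the function name, grid size and test wavefront are assumptions for illustration.

```python
def integrate_slopes(sx, sy, pitch):
    """Reconstruct W[i][j] (row i along y, column j along x) from slopes.

    sx[i][j] and sy[i][j] are dW/dx and dW/dy at each lenslet, and `pitch`
    is the lenslet spacing. Trapezoidal steps are taken down the first
    column and then along each row. W[0][0] is set to zero, since a
    constant offset (piston) cannot be measured.
    """
    rows, cols = len(sx), len(sx[0])
    w = [[0.0] * cols for _ in range(rows)]
    for i in range(1, rows):
        w[i][0] = w[i - 1][0] + 0.5 * (sy[i - 1][0] + sy[i][0]) * pitch
    for i in range(rows):
        for j in range(1, cols):
            w[i][j] = w[i][j - 1] + 0.5 * (sx[i][j - 1] + sx[i][j]) * pitch
    return w


# Assumed test wavefront W = a x^2 + b x y + c y^2 on a 4 x 5 grid.
pitch = 0.2
a, b, c = 0.5, 0.1, -0.3
rows, cols = 4, 5


def w_true(i, j):
    x, y = j * pitch, i * pitch
    return a * x * x + b * x * y + c * y * y


sx = [[2 * a * j * pitch + b * i * pitch for j in range(cols)] for i in range(rows)]
sy = [[b * j * pitch + 2 * c * i * pitch for j in range(cols)] for i in range(rows)]

w = integrate_slopes(sx, sy, pitch)
max_error = max(
    abs(w[i][j] - w_true(i, j)) for i in range(rows) for j in range(cols)
)
```

For a quadratic wavefront, the slopes are linear and the trapezoidal rule is exact. With noisy data, however, errors accumulate along the integration path, which is why least-squares type reconstructions are usually preferred.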

Further Calculations

The overall intensities in the spot pattern also provide information on the optical intensity profile of the incident light. Together with the wavefront orientations, that can be used to compute the whole complex amplitude profile. From that, one can further calculate the beam quality of a laser beam, e.g. specified with the M² factor, or the Strehl ratio. Note that it is sufficient to measure the complex amplitude profile in one plane – in the beam focus or outside.
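As one example, a Strehl ratio can be estimated from sampled amplitudes and phases. The sketch below assumes samples on a uniform grid, that any overall tilt has already been removed (so that the far-field peak lies on the axis), and that the reference is the same amplitude profile with a flat phase; the function name and the test data are hypothetical.

```python
import cmath
import math


def strehl_ratio(amplitudes, phases):
    """On-axis far-field intensity relative to the flat-phase case.

    `amplitudes` are field amplitudes (not intensities) and `phases` are
    in radians, both sampled on the same uniform grid.
    """
    field_sum = sum(a * cmath.exp(1j * p) for a, p in zip(amplitudes, phases))
    return abs(field_sum) ** 2 / sum(amplitudes) ** 2


# Assumed test data: uniform amplitude, phase alternating by +/- 0.1 rad.
amplitudes = [1.0] * 100
flat_phase = [0.0] * 100
rough_phase = [0.1 if i % 2 == 0 else -0.1 for i in range(100)]

s_flat = strehl_ratio(amplitudes, flat_phase)
s_rough = strehl_ratio(amplitudes, rough_phase)
```

For the flat phase, the result is 1; for the alternating phase, it is cos²(0.1) ≈ 0.990.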

Another possibility is to decompose measured optical aberrations according to Zernike polynomials, i.e., to calculate the Zernike coefficients, possibly in real time.
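A Zernike decomposition can be sketched as a least-squares fit of a few low-order modes to wavefront values on a circular aperture. The example below uses the first six Zernike polynomials in Noll ordering and normalization, solves the normal equations with plain Gaussian elimination, and fits to wavefront values rather than directly to measured slopes; the grid, the coefficients and all function names are assumptions for illustration.

```python
import math

# First six Zernike polynomials (Noll ordering and normalization),
# written in Cartesian coordinates on the unit disk.
ZERNIKE = [
    lambda x, y: 1.0,                                             # Z1 piston
    lambda x, y: 2.0 * x,                                         # Z2 tilt x
    lambda x, y: 2.0 * y,                                         # Z3 tilt y
    lambda x, y: math.sqrt(3.0) * (2.0 * (x * x + y * y) - 1.0),  # Z4 defocus
    lambda x, y: math.sqrt(6.0) * 2.0 * x * y,                    # Z5 astigmatism
    lambda x, y: math.sqrt(6.0) * (x * x - y * y),                # Z6 astigmatism
]


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_zernike(points, values):
    """Least-squares Zernike coefficients for samples on the unit disk."""
    basis = [[z(x, y) for z in ZERNIKE] for x, y in points]
    n = len(ZERNIKE)
    ata = [[sum(r[i] * r[j] for r in basis) for j in range(n)] for i in range(n)]
    atb = [sum(r[i] * v for r, v in zip(basis, values)) for i in range(n)]
    return solve(ata, atb)


# Assumed sample points: an 11 x 11 grid, restricted to the unit disk.
grid = [(-1 + 2 * j / 10, -1 + 2 * i / 10) for i in range(11) for j in range(11)]
points = [(x, y) for x, y in grid if x * x + y * y <= 1.0]

# Synthetic wavefront with known coefficients (in waves, for example).
true_coeffs = [0.0, 0.1, 0.0, 0.25, 0.0, -0.05]
values = [sum(c * z(x, y) for c, z in zip(true_coeffs, ZERNIKE)) for x, y in points]

coeffs = fit_zernike(points, values)
```

A least-squares fit is used here because the Zernike polynomials are orthogonal only over the continuous disk, not exactly on a discrete sampling grid.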

Considerations on Focal Length and Pixel Spacing

A long focal length of the lenses is in principle beneficial for achieving a high resolution for the wavefront orientation. However, it also limits the acceptable angular range for the input light, since the spot offsets may then become too large, leading to cross-talk with neighboring sensor segments. Therefore, it may be better to use a smaller focal length and improve the resolution by using a sensor with smaller pixel spacing.
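The trade-off can be made concrete with a rough estimate. The sketch below assumes the small-angle approximation, that the usable range ends when a spot reaches the edge of its own segment (offset of half the lenslet pitch), and that the centroid can be located to a fixed fraction of a pixel; that fraction and all numerical values are assumptions, not data of a real device.

```python
def sensor_tradeoff(pitch, focal_length, pixel, centroid_fraction=0.02):
    """Return (max_tilt, tilt_resolution) in radians.

    max_tilt: tilt for which the spot offset reaches pitch / 2.
    tilt_resolution: tilt which shifts the spot by `centroid_fraction`
    of a pixel (an assumed centroiding precision).
    All lengths must be given in the same unit.
    """
    max_tilt = 0.5 * pitch / focal_length
    tilt_resolution = centroid_fraction * pixel / focal_length
    return max_tilt, tilt_resolution


# Assumed design A: 150 um pitch, 5 mm focal length, 5 um pixels (in mm).
range_a, res_a = sensor_tradeoff(0.150, 5.0, 0.005)

# Assumed design B: half the focal length and half the pixel spacing.
range_b, res_b = sensor_tradeoff(0.150, 2.5, 0.0025)
```

Under these assumptions, design B has the same tilt resolution as design A (20 μrad) but twice the angular range (30 mrad instead of 15 mrad).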

Possible Artifacts

It has already been mentioned that cross-talk between neighboring lens elements can occur for too large incidence angles. However, there may also be problems with light scattered e.g. at the edges of the lenslets, or with parasitic reflections. High quality measurement results thus require a good optical quality of the microlens array; optimized data processing with suitable software algorithms can also help substantially.

Problems with Wavefront Steps

A limitation of Shack–Hartmann sensors is that abrupt steps in the wavefront (wavefront steps) cannot be detected. In the simplest case, one would have plane wavefronts everywhere, but a step just at the boundary between sensor segments; such a step would have no influence on the observed spot pattern and could therefore not be detected. A step going through the area of a lens segment can have more complicated effects.

Spectral Response

In many cases, the image sensor is a silicon-based CCD or CMOS chip, which has a substantial responsivity for visible and near-infrared light. (Such sensor chips are very common for use in monochrome cameras.) For laser light in the spectral region between 1 μm and 1.1 μm (e.g. Nd:YAG at the common 1064 nm laser line), the responsivity of a silicon detector is already substantially reduced, but usually there is no lack of laser power for obtaining sufficiently strong signals. On the other hand, the relatively higher sensitivity for ambient light can be detrimental, or require one to suppress that more strictly.

Note that the performance of anti-reflection coatings on the microlens array may also limit the usable spectral range. Most devices are made with broadband coatings, so that they are useful in a substantial wavelength range.

More special kinds of image sensors have to be used for other spectral regions, for example for the mid infrared.

Lens arrays are also difficult to realize for extreme spectral regions, for example for the extreme ultraviolet. In such cases, one may have to go back to the original Hartmann wavefront sensor, using an array of pinholes (a Hartmann plate) instead of the lens array.

Specifications

The most important specifications of wavefront sensors refer to the following aspects:

- Measurement area (aperture): this is typically a rectangular area, of which the width and height are specified. Typically, those are of the order of 5 mm to 20 mm, but much larger sensors are made e.g. for astronomical applications.

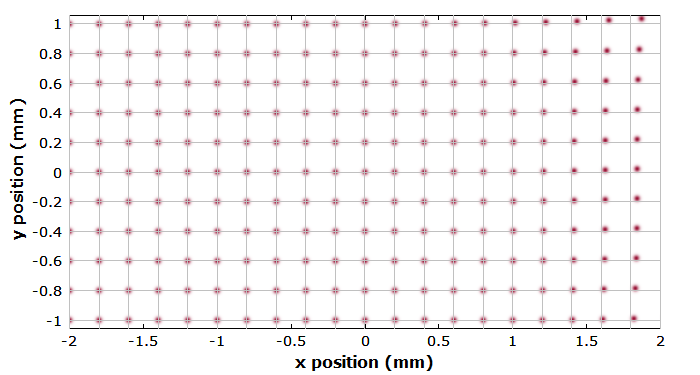

- Spatial resolution: this is determined by the pitch of the lenslets (not by the pixel pitch of the sensor). Typically, this is a few hundred micrometers. Therefore, it will usually be necessary to use some kind of beam expander for a typical laser beam. One may only have e.g. 80 × 50 detector segments, even if the image sensor has more than 1500 × 1000 pixels. Higher numbers of detector segments are required for measuring higher-order Zernike coefficients.

- Angular range: this is essentially limited by the lenslet pitch divided by the focal length of the array.

- Dynamic range concerning the optical phase: this can be far larger than 2π, and much larger than with many interferometers. Note that the instrument does not measure absolute phase values, but derivatives of the phase with respect to transverse coordinates. By integration, one can obtain rather large phase excursions, as long as those are not too rapid.

- Dynamic range concerning the optical input power: note that a sensor should never be saturated, but also not be operated with too low optical input powers. (Ideally, the instrument displays during operation whether the peak intensities are in a suitable range.) In practice, one may have to use a variable optical attenuator for adjusting the optical input power – but of course without introducing substantial additional wavefront distortions. Influences of ambient light can further reduce the effectively available dynamic range.

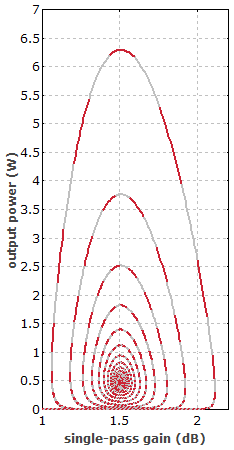

- The wavefront accuracy is often specified as a fraction of the optical wavelength. It may be λ/10 for simple devices or of the order of λ/100 for particularly precise sensors. Note that the wavefront accuracy may not be as high as the wavefront sensitivity: one may be able to detect small wavefront changes without being able to accurately quantify the values. Also, the highest measurement accuracy may be achieved only with some averaging, which can reduce the measurement speed in terms of measurements per second or (for rolling averages) just slow down the response to wavefront changes.

- The measurement speed is typically specified as the number of measurements per second; it is also called the frame rate and given in units of frames per second (fps). Note, however, that the achieved frame rate may be significantly lower than the maximum frame rate of the image sensor used, because the computations may take some time. Fast wavefront sensors can provide thousands of wavefront shapes per second. Large measurement areas typically come together with lower frame rates.

Further practical aspects of interest may be the geometrical dimensions of the measurement head, mounting options, the device used for data processing (e.g. internal electronics or PC software), the flexibility of the software, the electronic and/or software interfaces, and the requirements concerning calibration procedures.

Some devices allow one to exchange the microlens array, so that one can do measurements with different lenslet pitches. For some measurements, one may e.g. prefer an array with a small pitch for high spatial resolution, even if that reduces the angular range and possibly the obtained wavefront accuracy.

Applications of Shack–Hartmann Wavefront Sensors

Astronomical Telescopes

Shack–Hartmann wavefront sensors are frequently used in the context of adaptive optics, in particular for astronomical telescopes. They are needed to measure the wavefront orientation e.g. of light from a distant star or a laser guide star; the results are utilized for correcting the wavefront orientation with a deformable mirror.

Characterization of Optics

As an example, one may characterize the optical aberrations of lenses or lens systems (objectives) in transmission. A test laser beam is sent through the lens under test under suitable conditions (e.g. with an incident collimated beam or a strongly divergent beam), and the transmitted beam is sent to the wavefront sensor after being conveniently transformed with a few additional optical elements, such that approximately plane wavefronts would arise (in conjunction with a suitable beam radius) for a tested lens without aberrations. That way, one avoids excessive phase excursions at the sensor, which would be difficult to measure.

Other setups work in reflection. For example, one sends a laser beam with approximately flat wavefronts to an approximately flat tested mirror surface and analyzes the wavefronts of the reflected light. One may also test a lens, placed on a flat mirror, and use an additional high quality lens which provides approximately flat wavefronts before going to the wavefront sensor.

There are wavefront measurement systems which besides the wavefront sensor contain a light source (often a visible or near-infrared laser), various optical elements such as lenses and beam splitters, a flexible mount for optical components to be tested, and a computer system for processing the data and displaying the results.

Ocular Diagnostics

Another application is in ocular diagnostics concerning optical aberrations of the eye. For example, one may use a near-infrared laser to produce a small illuminated spot on the retina and analyze the wavefront orientation of light which goes through the eye's lens to the wavefront sensor. That way, one can directly measure the aberrations, e.g. to find out the amount of astigmatism which needs to be corrected with prescription glasses. Similarly, the shape of the cornea can be measured by using light which is reflected there.

One can also use such wavefront sensors for ocular imaging, where one can obtain much improved image quality and spatial resolution by correcting measured wavefront distortions with a deformable mirror or a liquid crystal modulator. Optical coherence tomography can also be combined with adaptive optics for improved lateral resolution.

Laser Beam Characterization

Wavefront sensors can also be used for the characterization of laser beams. In contrast to devices which only measure intensity profiles, for example to quantify the beam quality with the beam parameter product or M² factor, wavefront sensors can be used for obtaining the whole optical phase profile. One thus obtains more comprehensive information, which may help to identify the cause of beam quality deteriorations.

Comparison with Other Technologies

Various types of interferometers, for example Twyman–Green interferometers, are also often used to measure phase aberrations. They can often provide higher transverse resolution and cover larger measurement areas. However, the possible range of phase excursions can typically be substantially higher with the Shack–Hartmann sensor.

Suppliers

The RP Photonics Buyer's Guide contains 11 suppliers for Shack–Hartmann wavefront sensors.

See also: wavefronts, microlenses, microlens arrays
